Short Introduction to This Paper

This paper proposes a novel approach for discovering bugs in fault-tolerant data management systems: lineage-driven fault injection. A lineage-driven fault injector reasons backwards from correct system outcomes to determine whether failures in the execution could have prevented the outcome. The authors present MOLLY, a prototype of lineage-driven fault injection that exploits a novel combination of data lineage techniques from the database literature and state-of-the-art satisfiability testing. If fault-tolerance bugs exist for a particular configuration, MOLLY finds them rapidly, in many cases using an order of magnitude fewer executions than random fault injection. Otherwise, MOLLY certifies that the code is bug-free for that configuration.

Highlights of This Paper

- A novel top-down strategy for discovering bugs in distributed data management systems: lineage-driven fault injection (LDFI)

- MOLLY, an implementation of LDFI. Like fault injection, MOLLY finds bugs in large-scale, complex distributed systems quickly, in many cases using an order of magnitude fewer executions than a random fault injector

Key Infomation

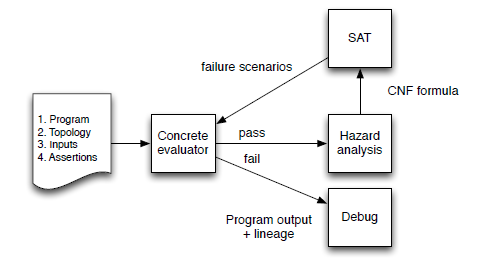

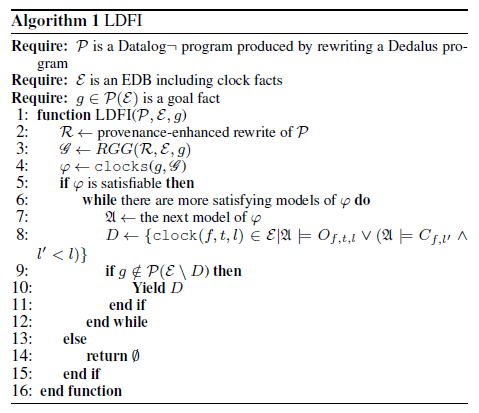

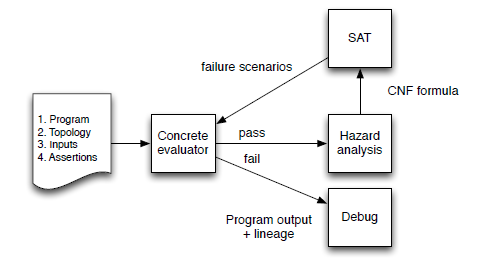

Figure 1: The MOLLY system performs forward/backward alternation: forward steps simulate concrete distributed executions with failures to produce lineage-enriched outputs, while backward steps use lineage to search for failure combinations that could falsify the outputs. MOLLY alternates between forward and backward steps until it has exhausted the possible falsifiers.

- LDFI is inspired by the database literature notion of data lineage, which allows it to directly connect system outcomes to the data and messages that led to them. LDFI uses data lineage to reason backwards (from effects to causes) about whether a given correct outcome could have failed to occur due to some combination of faults. Rather than generating faults at random (or using application-specific heuristics), a lineage-driven fault injector chooses only those failures that could have affected a known good outcome, exercising fault-tolerance code at increasing levels of complexity. Injecting faults in this targeted way allows LDFI to provide completeness guarantees like those achievable with formal methods such as model checking, which have typically been used to verify small protocols in a bottom-up fashion. When bugs are encountered, LDFI’s topdown approach provides—in addition to a counterexample trace fine-grained data lineage visualizations to help programmers understand the root cause of the bad outcome and consider possible remediation strategies

- Like formal methods, MOLLY finds all of the bugs that could be triggered by failures: when a MOLLY execution completes without counterexamples it certifies that no fault-tolerance bugs exist for a given configuration. MOLLY integrates naturally with root-cause debugging by converting counterexamples into data lineage visualizations.

- MOLLY quickly identifies 7 critical bugs in 14 fault-tolerant systems, for the remaining 7 systems, it provides a guarantee that no invariant violations exist up to a bounded execution depth, an assurance that state-of-the-art fault injectors cannot provide

Limitations

- While MOLLY can be used to find bugs in fundamentally non-deterministic protocols like anti-entropy or randomized consensus, certifying such protocols as bug-free will require additional research

- An abstraction that factors apart partial failure and asynchrony is fundamentally incomplete, because these algorithms are required to (attempt to) distinguish between delay and failure

Relevant Future Works

- Verifying recovery protocols by modeling crash-recovery failures

- Many of the why-not provenance techniques discussed in the paper could assist in implementing LDFI

- Relaxing the synchronicity abstraction

- Explore using the backwards reasoning approach of LDFI to assist in fault-tolerant program synthesis

URL

Lineage-driven Fault Injection