A Brief Introduction to The ChaosMachine for Verification and Analysis of Error-handling in the JVM (updated)

When we build applications, one of our aims should be making them resilient. A good application can sustain its operations in the face of different kinds of failure. The final tests for this don't begin until the application is deployed into a production environment, after which we cannot predict its trials or their results. A new approach is to change our perspective on errors in software systems: rather than trying to prevent them all the time, we trigger faults in a controlled situation, learn from the behavior of the application, and finally improve its resilience. To this end, we are designing a chaos agent whose first version focuses on verification and analysis of error-handling in the JVM.

About Chaos Engineering and Antifragile Software

If you are not familiar with chaos engineering, we provide introductory materials about this technique at the end of this article. Chaos engineering is the practice of experimenting on a distributed system in order to build confidence in the system’s capability to withstand unexpected conditions in production. As for antifragility, it's the antonym of "fragility". Traditional means to combat fragility include fault prevention, fault tolerance, fault removal, and fault forecasting. However, those techniques are not sufficient on their own, so we propose another perspective on system errors. If we can build mechanisms that let the system experience errors in a controlled environment and learn from those failures, we can build confidence in our system's resilience. The goal of chaos engineering and antifragile design is to perform these perturbations and learn from the experience.

A Chaos Engineering System for Live Analysis and Falsification of Exception-handling in the JVM

Updated: We have published a paper, together with the source code, about this work. If you are interested in it, please see:

- arXiv - A Chaos Engineering System for Live Analysis and Falsification of Exception-handling in the JVM

- GitHub - Repo of ChaosMachine

My current goal is to design a chaos engineering system, called CHAOSMACHINE, for verification and analysis of error-handling in the JVM. I want this tool to help us learn more about an application's error-handling mechanisms under production traffic while treating the application as a black box. The agent targets the Java Virtual Machine and is itself written in Java. The error-handling constructs we focus on are try/catch blocks in Java source code. Our implementation works at the level of the Java agent and Java byte-code, because we believe that sources and their test suites are usually unavailable in the production environment. This makes the chaos agent a good measure of the system's resilience.

When you deploy a system into a production environment, all of its unit tests should have passed. This is not enough to guarantee the system's correctness and robustness; test cases typically only cover failures the author has seen or can predict. They can easily miss corner cases, which then become a hidden threat to the production system. We can use the chaos agent to inject faults in production and observe the behavior of the system, helping developers find weaknesses in their application's ability to handle errors and novel conditions.

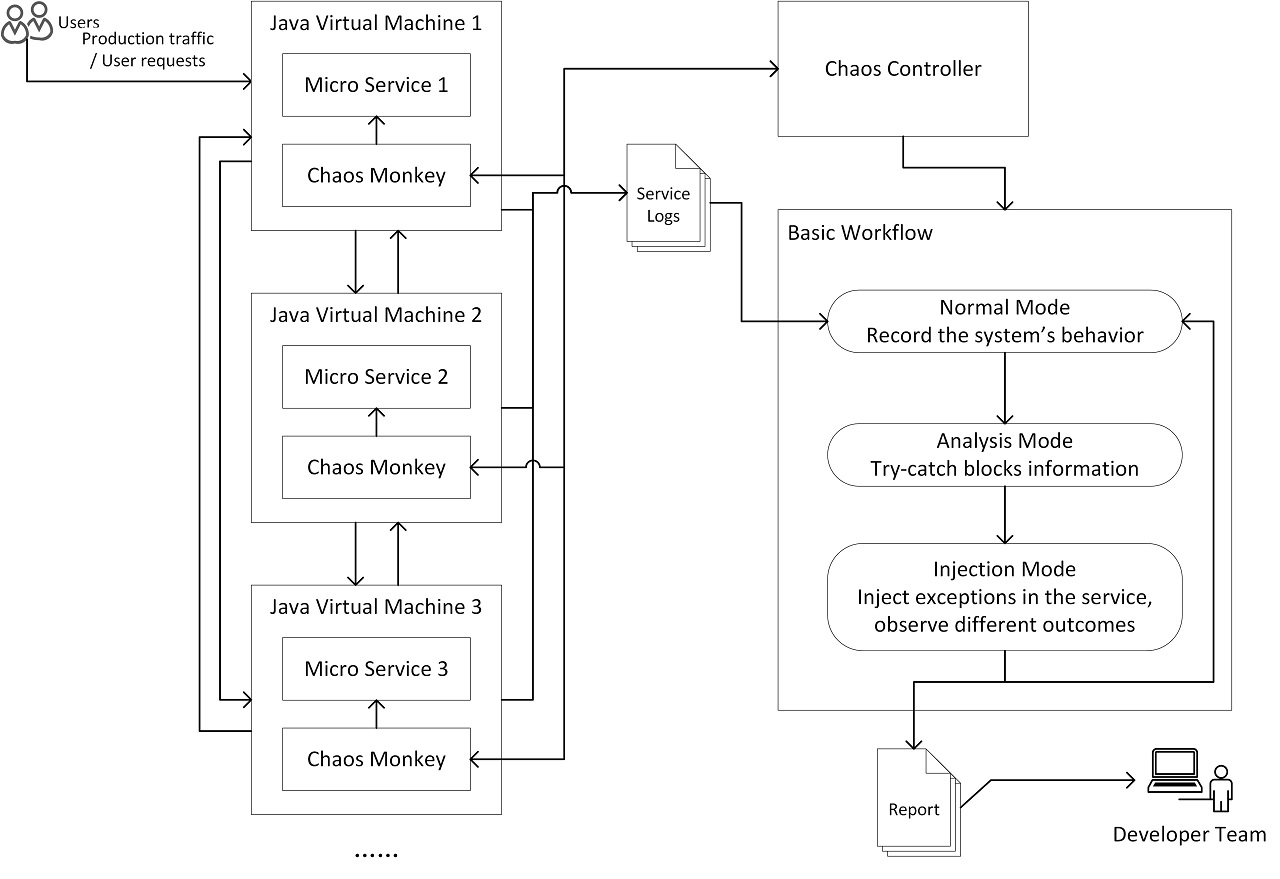

Figure 1: The overview work-flow of the chaos agent

The chaos agent work-flow is composed of six components, as shown in Figure 1.

1) There are two different roles involved in this work-flow: normal users who will randomly generate some requests i.e. production traffic, and members of the developer team who will receive the report generated by the chaos controller.

2) A series of Java Virtual Machines running several micro services, like website rendering, database query, cache query etc. in a website system.

3) The chaos monkey, as a Java agent, is loaded into the JVM together with the micro service. It's responsible for the try catch block registration, and switching the injection code on or off, to change the micro service behaviors under its control.

4) The chaos controller controls the behavior of chaos monkeys, and gathers information to generate a report for the developer team.

5) The service logs contain the main information for the chaos controller to perform analysis. Service logs can include both the micro service's business log and chaos monkey's analysis log.

6) The report is generated by the chaos controller based on several loops of the work-flow, which includes classification information about try-catch blocks covered by current user traffic, and some warnings about high risk try-catch blocks.

It's common to divide larger services into several different containers, in order to make the system more resilient, and to have those micro services cooperate to support individual user requests. Following chaos engineering practices, we can inject perturbations into the communication between different JVMs, or into a JVM at a low level. We can also deploy chaos monkeys into the services, to do more verification and analysis work on business layers. Using the Java instrumentation interface, we can control Java agents and modify classes at runtime.
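To make the role of the Java instrumentation interface concrete, here is a minimal sketch of an agent entry point. It is not the ChaosMachine implementation: the class names `ChaosAgentSketch` and `RecordingTransformer` and the prefix argument are assumptions made for illustration, and the transformer only records which classes pass through it instead of rewriting them. A real agent would rewrite `classfileBuffer` with a byte-code library at the marked point, and would normally declare the agent class `public` in its own file.

```java
import java.lang.instrument.ClassFileTransformer;
import java.lang.instrument.Instrumentation;
import java.security.ProtectionDomain;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical agent entry point; the name is illustrative only. */
final class ChaosAgentSketch {
    // The JVM calls premain before the application's main method when it is
    // started with -javaagent:<agent jar>, provided the jar manifest names
    // this class in its Premain-Class attribute.
    public static void premain(String agentArgs, Instrumentation inst) {
        // Assumption: the agent argument is an internal-name prefix such as "com/example/".
        String prefix = (agentArgs == null) ? "" : agentArgs;
        inst.addTransformer(new RecordingTransformer(prefix));
    }
}

/** Sees every class as it is loaded; here it only records matching names. */
final class RecordingTransformer implements ClassFileTransformer {
    private final String prefix;
    private final Set<String> seen = ConcurrentHashMap.newKeySet();

    RecordingTransformer(String prefix) {
        this.prefix = prefix;
    }

    @Override
    public byte[] transform(ClassLoader loader, String className,
                            Class<?> classBeingRedefined,
                            ProtectionDomain protectionDomain,
                            byte[] classfileBuffer) {
        // className uses '/' separators and may be null for some generated classes.
        if (className != null && className.startsWith(prefix)) {
            seen.add(className);
            // A real chaos monkey would locate try-catch blocks in
            // classfileBuffer here and return the rewritten bytes.
        }
        return null; // null tells the JVM to keep the class unchanged
    }

    Set<String> seenClasses() {
        return seen;
    }
}
```

Returning `null` from `transform` is the documented way to leave a class untouched, which keeps this sketch safe to load next to any application.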

As shown by Benoit, Lionel and Martin's study of system's resilience against unanticipated exceptions, it's possible to classify try-catch blocks only using production traffic. This is helpful for developers looking to understand which try-catch blocks have a strong influence on their system's steady state when an exception occurs. We call the operation of "injecting a relevant exception in the very beginning of the try block" a short-circuit operation. This try-catch block has to handle the worst error case since the complete try-block is skipped. That's how the chaos monkey perturbs the micro service in this agent. The basic phases are shown as follows:

1) Initialization Phase: In this phase, the application starts with chaos monkey and relevant classes will be loaded into the JVM. As a Java agent, the chaos monkey will transform the classes, detect every try catch block, and inject the chaos byte-code into the beginning of those try blocks.

2) Analysis Phase: Then, the chaos controller will switch all the try catch blocks' chaos modes into "analyze", which means that chaos monkey will begin to log try catch block information. The chaos controller captures these logs and starts its analysis, to determine how many try catch blocks have been covered by users' requests (production traffic) from when the application started to the current time.

3) Fault Injection Phase: After running the analysis mode for a while, the chaos controller switches into a fault injection phase. It will pick one try catch block from the covered ones, turn on its perturbation mode and capture relevant logs. The chaos monkey in this try catch block will throw an exception at the beginning of the try block, which will be caught by one of the corresponding catch blocks.

4) Fault Recovery Phase: After capturing enough data for the specific fault injection, the chaos controller will turn off the injection and try to generate a report for developers, indicating whether there are any risks in the relevant try-catch blocks. If some exceptions crash the whole application, the chaos controller should have some mechanisms to recover the application. This should be considered by developers if they plan to apply chaos engineering into their product.
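The phases above can be pictured at source level with the following sketch. It is only an illustration under stated assumptions: the real chaos monkey injects byte-code rather than source, and `ChaosRegistry`, `ChaosMode`, `Downloader` and the block identifier format are hypothetical names invented here. Each try block starts with a call that does nothing when the block is off, counts the visit in "analyze" mode, and throws an exception of a type the catch block handles in injection mode, so the rest of the try block is short-circuited.

```java
import java.io.IOException;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Supplier;

/** Hypothetical per-block modes, mirroring the phases described above. */
enum ChaosMode { OFF, ANALYZE, INJECT }

/** Hypothetical registry that a chaos controller could drive at runtime. */
final class ChaosRegistry {
    private static final Map<String, ChaosMode> MODES = new ConcurrentHashMap<>();
    private static final Map<String, AtomicLong> HITS = new ConcurrentHashMap<>();

    private ChaosRegistry() {}

    static void setMode(String blockId, ChaosMode mode) {
        MODES.put(blockId, mode);
    }

    /** Number of times production traffic reached the block while it was not OFF. */
    static long hits(String blockId) {
        AtomicLong counter = HITS.get(blockId);
        return counter == null ? 0 : counter.get();
    }

    /** Stand-in for the code injected at the very beginning of a try block. */
    static <E extends Exception> void enter(String blockId, Supplier<E> fault) throws E {
        ChaosMode mode = MODES.getOrDefault(blockId, ChaosMode.OFF);
        if (mode == ChaosMode.OFF) {
            return;
        }
        HITS.computeIfAbsent(blockId, id -> new AtomicLong()).incrementAndGet();
        if (mode == ChaosMode.INJECT) {
            throw fault.get(); // short-circuit: the remainder of the try block is skipped
        }
    }
}

/** Toy application code with one instrumented try-catch block. */
final class Downloader {
    static String fetchPiece() {
        try {
            ChaosRegistry.enter("Downloader.fetchPiece#0",
                    () -> new IOException("injected by chaos monkey"));
            return "piece";
        } catch (IOException e) {
            return "fallback";
        }
    }
}
```

With the block left off, `Downloader.fetchPiece()` takes the normal path; after `ChaosRegistry.setMode("Downloader.fetchPiece#0", ChaosMode.INJECT)` the same call goes through the catch block instead, which is the behavior the controller then observes in the logs.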

Some Experiments With This Chaos Agent

First of all, I contributed to the Byte-monkey project on GitHub, adding a new "short-circuit" mode to perform the try-catch blocks' perturbations, which is the main part of the chaos monkey in the agent. Since the scope for the project is only to inject different failure modes, I designed other parts of the chaos agent in another project, including features like registering try-catch information, switching to different modes in the runtime, analyzing relevant logs, etc. You can find more details in my forked byte-monkey project here, but the documentation hasn't been finished yet.

Next, we ran a series of analyses on TTorrent. TTorrent is a pure-Java implementation of the BitTorrent protocol, providing a BitTorrent tracker, a BitTorrent client and the related Torrent metainfo file creation and parsing capabilities. It is designed to be embedded into larger applications, but its components can also be used as standalone programs. Here are some basic assumptions and constraints:

- There is only 1 tracker server and 1 seeder running without any perturbations.

- We will only run 1 TTorrent client with the chaos agent at a time. The client will download a file from the seeder and exit after downloading the file.

- We will only inject exceptions into 1 specific try block in the client, and the injection stays active for the life of the process.

We aim to determine whether we can learn something from the outcomes that helps build our confidence in the system's resilience.

What Can We Learn from This?

While performing these tests on TTorrent, we learned that the TTorrent client has different reactions to different fault injections, including:

- Whether we can successfully capture injection logs and corresponding error logs from the TTorrent client

- How developers handle error logs. Some software catches exceptions and ignores them, while other software doesn't log enough details

- Whether the file is still correctly downloaded with this fault injection

- Whether the client can exit normally

Based on different reactions under perturbations, we can classify the try-catch blocks, and make the developer team pay more attention to the ones that would influence the system's steady state.
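As a rough illustration of such a classification, the sketch below maps the four observations listed above to a risk label for one try-catch block. The labels, the class names and the decision order are assumptions made for this example, not the categories used by the tool.

```java
/** Observations from one fault-injection run; field names are illustrative. */
final class RunOutcome {
    final boolean injectionLogged; // the injection log entry was captured
    final boolean errorLogged;     // the application logged the resulting error
    final boolean fileDownloaded;  // the file was still downloaded correctly
    final boolean exitedNormally;  // the client exited normally

    RunOutcome(boolean injectionLogged, boolean errorLogged,
               boolean fileDownloaded, boolean exitedNormally) {
        this.injectionLogged = injectionLogged;
        this.errorLogged = errorLogged;
        this.fileDownloaded = fileDownloaded;
        this.exitedNormally = exitedNormally;
    }
}

/** Hypothetical labels; not the tool's own terminology. */
enum BlockRisk { NOT_EXERCISED, RESILIENT, RESILIENT_BUT_SILENT, HIGH_RISK }

final class BlockClassifier {
    private BlockClassifier() {}

    static BlockRisk classify(RunOutcome o) {
        if (!o.injectionLogged) {
            return BlockRisk.NOT_EXERCISED; // traffic never reached the block
        }
        if (!o.fileDownloaded || !o.exitedNormally) {
            return BlockRisk.HIGH_RISK; // the steady state was disturbed
        }
        // The steady state held; distinguish whether the error was visible in logs.
        return o.errorLogged ? BlockRisk.RESILIENT : BlockRisk.RESILIENT_BUT_SILENT;
    }
}
```

A report built this way would list the `HIGH_RISK` blocks first, since those are the ones whose failure changed what the user observed.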

This method can be used in many different kinds of systems. We will do more experiments on website systems later. In those experiments, there will be a user traffic generator (or real user traffic) running in advance, and the chaos controller will change the mode of the chaos monkeys at runtime to dynamically analyze system outcomes. We would study how many try-catch blocks strongly influence user requests when an error occurs, how many try-catch blocks are purely resilient, and whether there are any error cases that the developers forgot to take into consideration.

Finally, the idea and design of this chaos agent can be reused to verify and analyze other patterns, like if conditions in the runtime. We can also enrich the monitoring metrics in order to find more useful information. Likewise, we can combine JMX data into the learning phase and might find clues about memory leak risks.
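As one possible starting point for combining JMX data with an experiment, the sketch below reads heap usage through the standard `java.lang.management` API before and after a run. The `HeapProbe` class is hypothetical, and a single before/after sample is noisy because of garbage collection, so a real analysis would need repeated samples over time.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

/** Hypothetical helper that samples heap usage through the platform MXBean. */
final class HeapProbe {
    private HeapProbe() {}

    /** Heap memory currently in use, in bytes. */
    static long usedHeapBytes() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        return memory.getHeapMemoryUsage().getUsed();
    }

    /** Difference in used heap across one experiment; it can be negative after a GC. */
    static long heapGrowthAround(Runnable experiment) {
        long before = usedHeapBytes();
        experiment.run();
        return usedHeapBytes() - before;
    }
}
```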

The first thing my supervisor, Professor Martin Monperrus, told me is that doing science is a kind of creative work: we should not only verify things but also explore what we have never tried before. One of the most important keys to achieving that is building connections: between different researchers, between industry and academia, between different research fields, and so on. This is why I am writing this post. I hope to share more about my current research work with all of you, and if you have relevant experience with or interest in these problems, we would love to communicate more in the future!

Acknowledgement

I would like to show my gratitude to my supervisor, Professor Martin Monperrus who has provided me with valuable guidance in every stage of the research work. And I really appreciate Pat Gunn's help on revising this post, to make it much clearer and easier to read!

Useful information about chaos engineering

Principles of Chaos Engineering

A curated list of awesome Chaos Engineering resources on GitHub