[Paper Review] Automating Failure Testing Research at Internet Scale

Short Introduction to This Paper

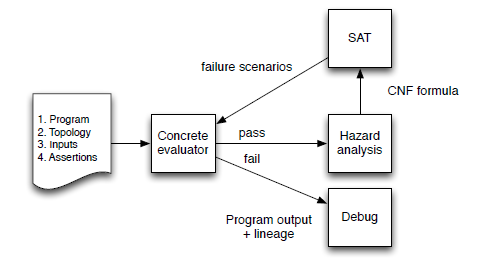

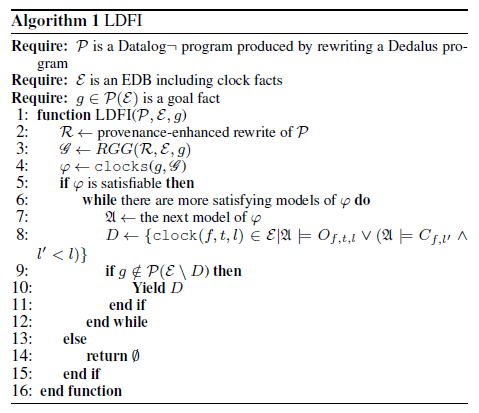

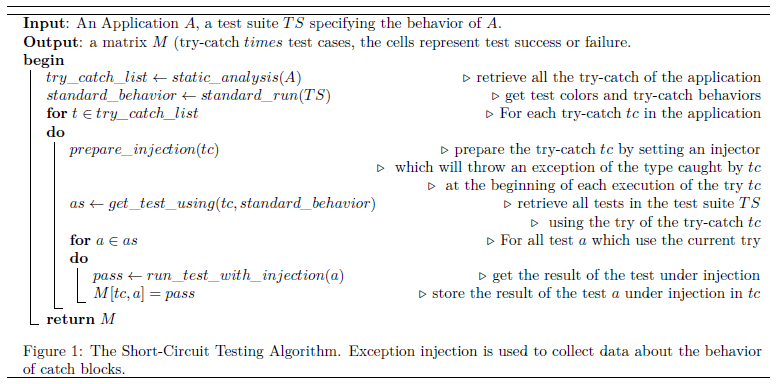

This paper describes how the authors adapted and implemented a research prototype called lineage-driven fault injection (LDFI, covered in a separate paper review) to automate failure testing at Netflix. Along the way, they describe the challenges that arose in adapting the LDFI model to the complex and dynamic realities of the Netflix architecture. They show how they implemented the adapted algorithm as a service atop the existing tracing and fault injection infrastructure, and present preliminary results.

Highlights of This Paper

- How the authors adapted LDFI to automate failure testing at Netflix is worth studying, in particular how they define request classes, learn mappings, and implement replay mechanisms.

Key Information

- More on LDFI: it begins with a correct outcome and asks why the system produced it. Recursively asking these "why" questions yields a lineage graph that characterizes all of the computations and data that contributed to the outcome. In doing so, it reveals the system’s implicit redundancy by capturing the various alternative computations that could produce the good result.

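- About the solutions to the SAT problem: each solution is a set of faults that would defeat every alternative computation in the lineage graph. A solution does not necessarily indicate a bug; rather, it is a hypothesis that must be tested via fault injection.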
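
To make the idea of "solutions as hypotheses" concrete, here is a minimal sketch. It assumes the lineage graph has already been reduced to a list of alternative computations, each represented as the set of services it depends on. The paper hands this problem to a SAT solver; the sketch swaps in a brute-force enumeration of minimal hitting sets, which is only practical for tiny examples. The service names and the function `minimal_hitting_sets` are hypothetical and not taken from the paper.

```python
from itertools import combinations


def minimal_hitting_sets(alternatives, max_faults=None):
    """Enumerate minimal fault sets that intersect every known alternative.

    Each alternative is a frozenset of service names (hypothetical) that
    together are sufficient to produce the good outcome. A returned set is a
    hypothesis: failing all of its services breaks every alternative we know of.
    """
    services = sorted(set().union(*alternatives))
    limit = len(services) if max_faults is None else max_faults
    found = []
    for size in range(1, limit + 1):
        for combo in combinations(services, size):
            candidate = frozenset(combo)
            # Skip supersets of a hypothesis that was already found (not minimal).
            if any(prev <= candidate for prev in found):
                continue
            # Keep the candidate only if it removes a service from every alternative.
            if all(candidate & alt for alt in alternatives):
                found.append(candidate)
    return found


# Two alternative computations for one request: the second uses a cache
# in place of the ratings service (illustrative names only).
alternatives = [
    frozenset({"api", "ratings", "playlist"}),
    frozenset({"api", "ratings-cache", "playlist"}),
]
hypotheses = minimal_hitting_sets(alternatives)
```

For this input the hypotheses are `{api}`, `{playlist}`, and `{ratings, ratings-cache}`. None of them is a confirmed bug; each one is a fault combination that still has to be injected to see whether the system has a further alternative that the model does not know about.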

Implementation

- Training: the service collects production traces from the tracing infrastructure and uses them to build a classifier that determines, given a user request, which request class it belongs to. The authors found that the most predictive features include service URI, device type, and a variety of query string parameters, including parameters passed to the Falcor data platform.

- Model enrichment: the service also uses production traces generated by experiments (fault injection exercises that test prior hypotheses produced by LDFI) to update its internal model of alternatives. Intuitively, if an experiment failed to produce a user-visible error, then the call graph generated by that execution is evidence of an alternative computation not yet represented in the model, so it must be added. Doing so effectively prunes the space of future hypotheses.

- Experiments: finally, the service occasionally "installs" a new set of experiments on Zuul, Netflix's edge gateway. This requires providing Zuul with an up-to-date classifier and the current set of failure hypotheses for each active request class. Zuul will then (for a small fraction of user requests) consult the classifier and decorate those requests with the appropriate fault metadata.
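
The model enrichment and experiment steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not the Netflix implementation: the `LdfiModel` class, the `decorate` function, the `fault_metadata` key, and the dictionary-shaped request are all hypothetical, and the trained classifier is stood in for by a plain callable. The sketch also assumes that the observed call graph of a successful run contains only the services that actually contributed to the response.

```python
import random
from collections import defaultdict


class LdfiModel:
    """Per-request-class alternatives and open hypotheses (hypothetical structure)."""

    def __init__(self):
        self.alternatives = defaultdict(list)  # request class -> [frozenset of services]
        self.hypotheses = defaultdict(list)    # request class -> [frozenset of services to fail]

    def record_experiment(self, request_class, observed_services, user_visible_error):
        """Model enrichment: fold one fault injection outcome into the model."""
        if user_visible_error:
            return  # the injected faults broke the request; no new alternative was observed
        new_alt = frozenset(observed_services)
        if new_alt not in self.alternatives[request_class]:
            self.alternatives[request_class].append(new_alt)
        # Prune hypotheses that leave the newly observed alternative untouched.
        self.hypotheses[request_class] = [
            h for h in self.hypotheses[request_class] if h & new_alt
        ]


def decorate(request, classify, model, sample_rate, rng):
    """Experiments: attach fault metadata to a small fraction of requests."""
    if rng.random() >= sample_rate:
        return request  # most requests pass through untouched
    open_hypotheses = model.hypotheses.get(classify(request))
    if not open_hypotheses:
        return request
    faults = rng.choice(open_hypotheses)
    return {**request, "fault_metadata": sorted(faults)}


model = LdfiModel()
model.alternatives["playback"].append(frozenset({"api", "ratings"}))
model.hypotheses["playback"] = [frozenset({"api"}), frozenset({"ratings"})]

# Failing "ratings" produced no user-visible error: a fallback served the request.
model.record_experiment("playback", {"api", "ratings-fallback"}, user_visible_error=False)

tagged = decorate({"uri": "/play"}, lambda r: "playback", model, 1.0, random.Random(0))
```

After the experiment is recorded, the hypothesis `{ratings}` is dropped because it does not touch the newly observed alternative, and only `{api}` remains for the `playback` class. The `sample_rate` of `1.0` is used here only so that the example request is always decorated; in the paper only a small fraction of user requests receives fault metadata.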

Relevant Future Work

- The authors used a single-label classifier for the first release of the LDFI service, but are continuing to investigate the multi-label formulation.